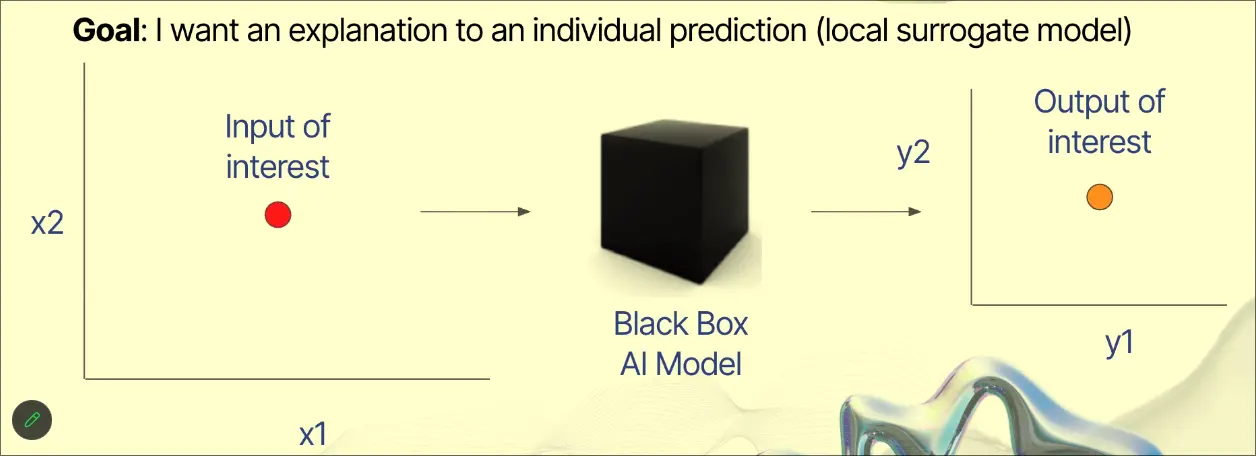

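LIME (Local Interpretable Model-agnostic Explanations): a form of model-agnostic interpretability

It explains an individual prediction by approximating the model's behavior locally, in the neighborhood of that one prediction.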

Definition

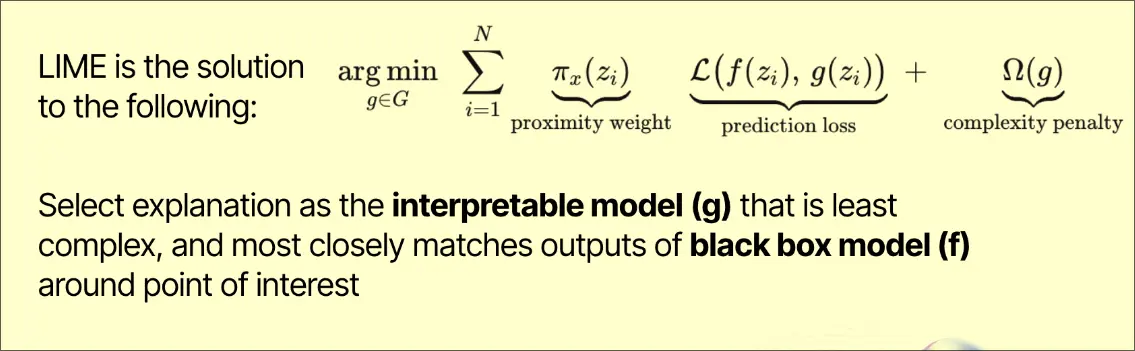

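- The proximity weight measures how close a perturbed sample is to the point of interest (the instance being explained); closer samples count more when the local model is fitted.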
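For reference, the original LIME paper (Ribeiro, Singh and Guestrin, 2016) states the explanation as an optimization problem. It is reproduced here as context; the notation may differ from the figure above. Here $f$ is the black-box model, $g$ an interpretable model from a class $G$ (e.g. linear models), $\Omega(g)$ a complexity penalty, $z'$ the interpretable representation of a perturbed sample $z$, $D$ a distance function and $\sigma$ the kernel width:

$$
\xi(x) = \arg\min_{g \in G} \; \mathcal{L}(f, g, \pi_x) + \Omega(g)
$$

$$
\mathcal{L}(f, g, \pi_x) = \sum_{z, z' \in \mathcal{Z}} \pi_x(z)\,\bigl(f(z) - g(z')\bigr)^2,
\qquad
\pi_x(z) = \exp\!\left(-\frac{D(x, z)^2}{\sigma^2}\right)
$$

The proximity weight is $\pi_x(z)$: it equals 1 when $z = x$ and decays toward 0 as $z$ moves away from $x$.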

Process

-

Data perturbation - lime generates dataset of perturbed samples

-

Prediction collection - blackbox model makes predictions on perturbed samples

-

Weight assignment - sample closer to original instance receive higher weights

-

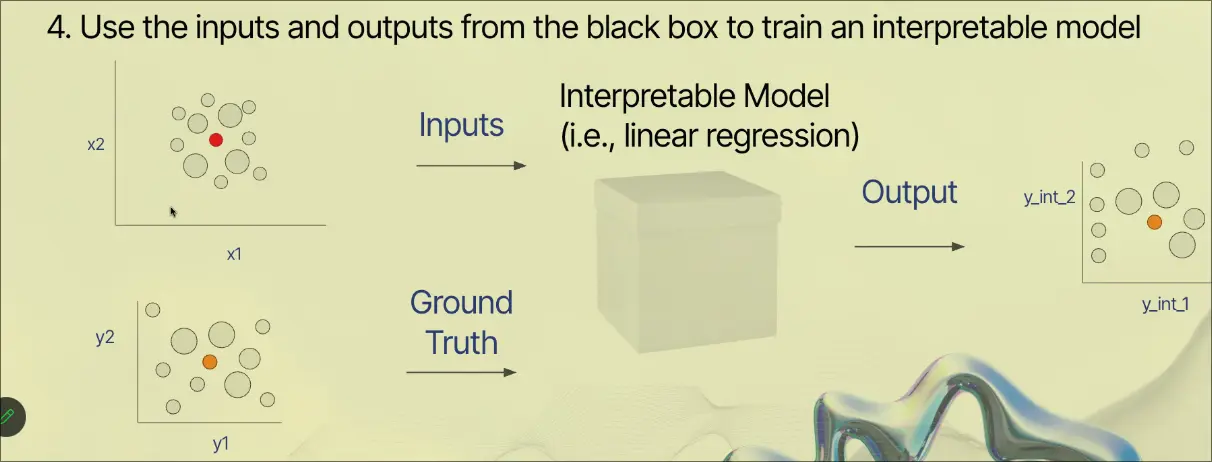

Local model training - interpretable model (like Linear Regression) is trained on weighted dataset

-

Explanation generation - coefficients of local model serve as explanations for original prediction

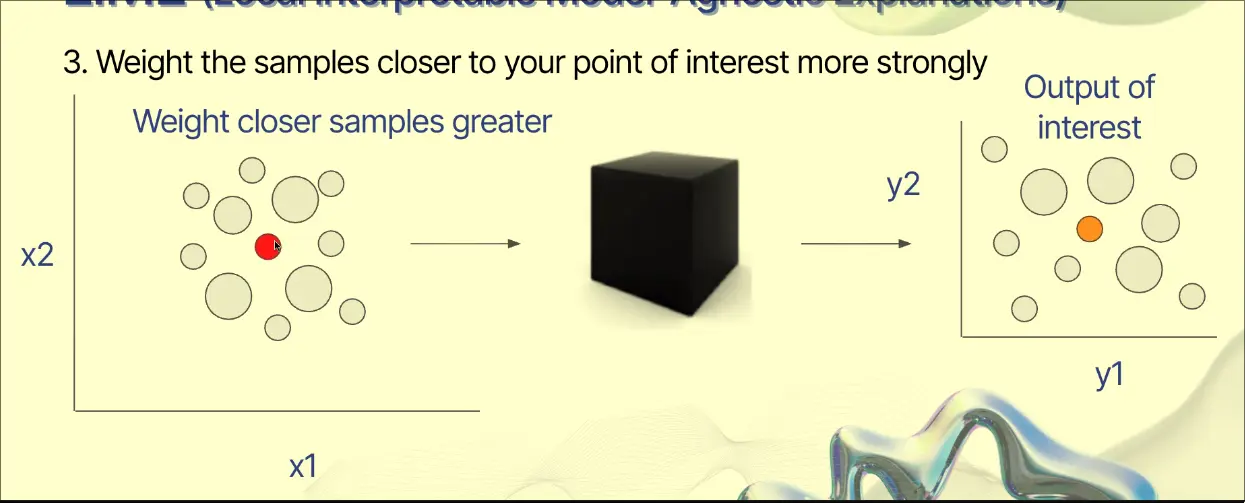

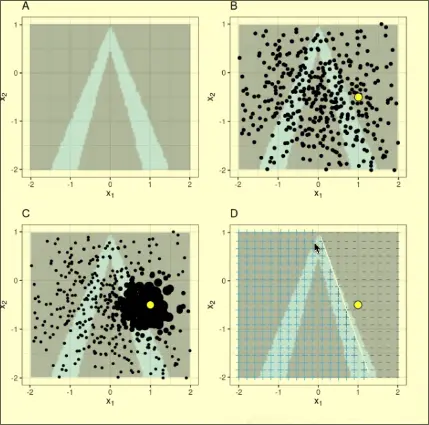
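The five process steps can be sketched in Python with NumPy for numeric (tabular) features. This is a minimal sketch under assumptions the notes do not state: samples are drawn by adding Gaussian noise of standard deviation `scale` to the instance, proximity weights use an exponential kernel on Euclidean distance with width `kernel_width`, and the interpretable model is weighted least-squares linear regression. `lime_explain` and its parameters are hypothetical names, not the API of the `lime` package. For a classifier, `predict_fn` would return the probability of the class being explained.

```python
import numpy as np


def lime_explain(predict_fn, x, num_samples=5000, scale=1.0, kernel_width=0.75, seed=0):
    """Hypothetical minimal LIME-style explanation of predict_fn at instance x.

    Assumed choices (not from the notes): Gaussian perturbations, an
    exponential proximity kernel, and weighted linear regression.
    Returns (intercept, per-feature slopes).
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)

    # 1. Data perturbation: sample points around x with Gaussian noise.
    Z = x + rng.normal(0.0, scale, size=(num_samples, x.size))

    # 2. Prediction collection: query the black-box model on every sample.
    y = np.asarray(predict_fn(Z), dtype=float)

    # 3. Weight assignment: closer samples get larger proximity weights.
    dist = np.linalg.norm(Z - x, axis=1)
    w = np.exp(-(dist ** 2) / kernel_width ** 2)

    # 4. Local model training: weighted least squares with an intercept.
    #    Features are centered at x, so the intercept approximates f(x).
    A = np.hstack([np.ones((num_samples, 1)), Z - x])
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)

    # 5. Explanation generation: the slopes are the per-feature explanation.
    return coef[0], coef[1:]
```

As a sanity check, explaining a black box that is already linear, such as `f = lambda Z: 3 * Z[:, 0] - 2 * Z[:, 1] + 1` at `x = [1.0, 2.0]`, should recover slopes of approximately 3 and -2, because a linear function is its own best local linear approximation.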

LIME Usage

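- We start with a black-box model that separates blue points from grey points

- Scatter many perturbed points around the point of interest and have the black-box model label each one

- Weight each point by how close it is to the point of interest

- Train a new, simple model for that point on the weighted samples; its decision boundary approximates how the black-box model separates the blues from the greys in that neighborhood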
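The blue/grey walkthrough can be reproduced on a toy problem. In this sketch the black-box model is hypothetical: it calls a point blue inside the unit circle and grey outside, reported as a smooth probability of "blue". The point of interest sits on the curved boundary, and the sampling spread (0.5), kernel width and sample count are arbitrary illustrative choices, not values from the notes.

```python
import numpy as np

rng = np.random.default_rng(0)


# Hypothetical black-box model: probability that a point is "blue".
# Blue inside the unit circle, grey outside (a non-linear boundary).
def black_box_p_blue(Z):
    r2 = np.sum(Z ** 2, axis=1)
    return 1.0 / (1.0 + np.exp(-5.0 * (1.0 - r2)))


x = np.array([1.0, 0.0])  # point of interest, on the edge of the circle

# Scatter points around x and let the black box label them.
Z = x + rng.normal(0.0, 0.5, size=(2000, 2))
p = black_box_p_blue(Z)

# Weight each point by its closeness to x (exponential kernel).
w = np.exp(-np.sum((Z - x) ** 2, axis=1) / 0.25)

# Train the local linear model by weighted least squares.
A = np.column_stack([np.ones(len(Z)), Z - x])
sw = np.sqrt(w)
coef, *_ = np.linalg.lstsq(A * sw[:, None], p * sw, rcond=None)
intercept, slope = coef[0], coef[1:]

# Points where intercept + slope @ (z - x) = 0.5 form a straight line that
# approximates the black box's curved blue/grey boundary near x.
print("intercept:", round(intercept, 3))
print("slopes:", np.round(slope, 3))
```

The first slope should come out clearly negative: near `x`, moving right makes the model more confident the point is grey. The second slope should be close to zero, since moving along the boundary barely changes the prediction to first order. That is the local story the straight-line model tells, even though the true boundary is curved.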